- #Create chatbot tutorial php how to

- #Create chatbot tutorial php install

- #Create chatbot tutorial php professional

Make two files in this project folder: chat.html and chat.php.

#Create chatbot tutorial php install

I'll take this tutorial in steps. I'm using Laragon as my local server. Open the project folder in your preferred text editor, make a simple page called index.php, and draft out an HTML template in it. Then, in this project folder, run the commands that set up the required dependencies. These two commands install the BotMan library we will use for this project through Composer, the PHP dependency manager.

#Create chatbot tutorial php how to

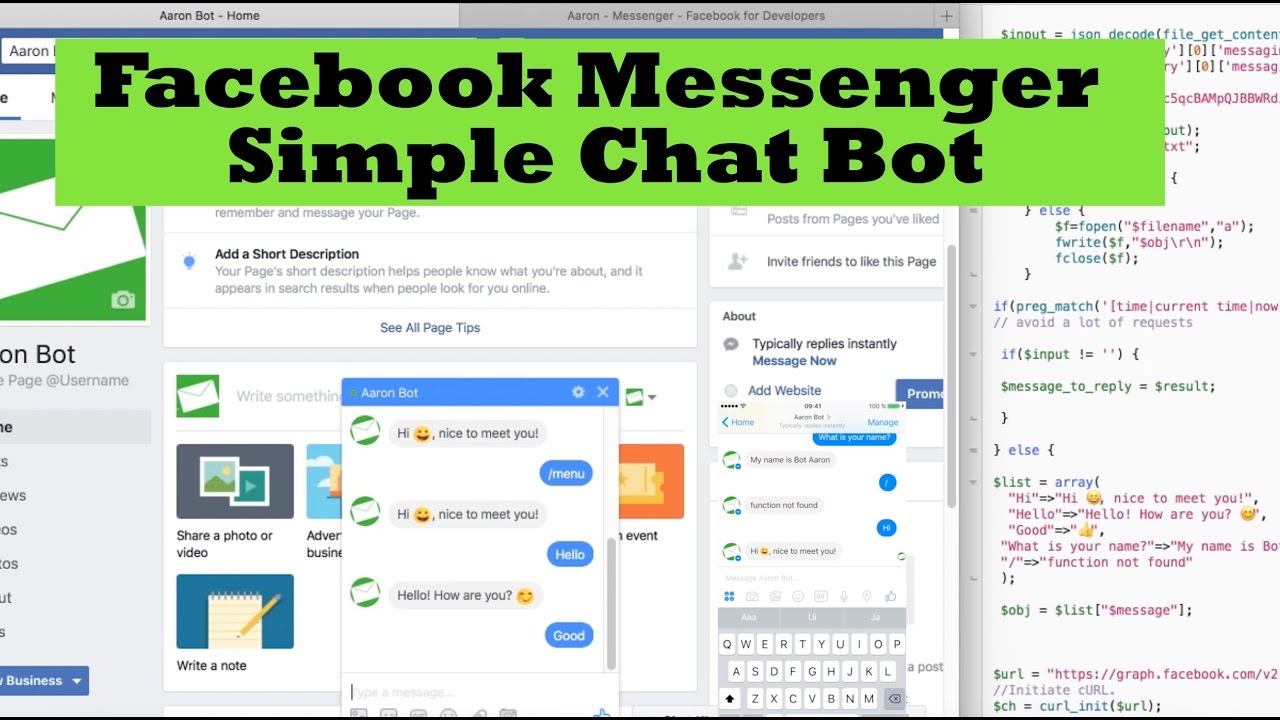

We all come across bots on the platforms we use, for example Slack bots, Telegram bots, and Messenger bots. Today, I'll show you how to integrate a simple chatbot of your own using the BotMan library.

You will learn:

- How to set up a simple chatbot with the BotMan package
- The basics of BotMan, the most popular PHP chatbot framework in the world

Prerequisites:

- A local server (XAMPP, WAMP, Laragon, or MAMP)

#Create chatbot tutorial php professional

The chatbot app loads Microsoft's DialoGPT model through the `transformers` library:

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

description = "A State-of-the-Art Large-scale Pretrained Response generation model (DialoGPT)"
tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-large")
model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-large")


def predict(message, history=None):
    history = history or []  # the chat history starts empty
    # tokenize the new user input, terminated by the EOS token
    new_ids = tokenizer.encode(message + tokenizer.eos_token, return_tensors="pt")
    # append the new user input tokens to the chat history
    bot_input_ids = torch.cat([torch.LongTensor(history), new_ids], dim=-1)
    # generate a reply; the output holds the whole conversation so far
    history = model.generate(bot_input_ids, max_length=4000, pad_token_id=tokenizer.eos_token_id).tolist()
    # convert the tokens to text, and then split the responses into lines
    response = tokenizer.decode(history[0]).split("<|endoftext|>")
    # pair alternating (user, bot) turns for the chat display
    response = [(response[i], response[i + 1]) for i in range(0, len(response) - 1, 2)]
    return response, history
```

Moreover, I have given my app a customized theme, boxy_violet. You can browse the Gradio Theme Gallery to pick a theme that suits your taste.

Next, create a `requirements.txt` file and add the required Python packages. After that, your app will start building, and within a few minutes it will download the model and load it for inference. You can then chat with the app at kingabzpro/AI-ChatBot or embed it on your website.

For a different model architecture, you only have to write a new `predict` function that gets the model's responses and maintains the chat history. Are you still confused? Look through the hundreds of chatbot apps on Spaces for inspiration and to understand model inference. For example, if you have a model fine-tuned on “LLaMA-7B”, search for that model and scroll down to see various implementations of it.

In conclusion, this blog provides a quick and easy tutorial on creating an AI chatbot with Hugging Face and Gradio in just 5 minutes. With step-by-step instructions and customizable options, anyone can create their own chatbot. It was fun, and I hope you have learned something. Please share your Gradio demo in the comment section. If you are looking for an even simpler solution, check out OpenChat: The Free & Simple Platform for Building Custom Chatbots in Minutes.

Abid Ali Awan is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.
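DialoGPT keeps the whole conversation as one token stream in which every turn ends with the `<|endoftext|>` EOS token. Decoding that stream and splitting on the token therefore yields alternating user and bot turns, which the list comprehension in `predict` pairs up for display. The sketch below reproduces only that string-handling step in plain Python, so it runs without `torch` or `transformers`. The `pair_turns` helper and the sample conversation are hypothetical illustrations, not real model output.

```python
EOS = "<|endoftext|>"  # DialoGPT's end-of-turn token (tokenizer.eos_token)


def pair_turns(decoded: str) -> list[tuple[str, str]]:
    """Split a decoded DialoGPT history into (user, bot) pairs.

    Hypothetical helper mirroring the list comprehension in `predict`.
    """
    turns = decoded.split(EOS)
    # Turns alternate user, bot, user, bot, ...; the range stops early enough
    # that turns[i + 1] always exists, which for a complete exchange also
    # skips the empty string left after the final EOS.
    return [(turns[i], turns[i + 1]) for i in range(0, len(turns) - 1, 2)]


if __name__ == "__main__":
    # Made-up sample history, not real model output.
    history = f"Hi there{EOS}Hello! How are you?{EOS}Fine, thanks{EOS}Glad to hear it.{EOS}"
    for user, bot in pair_turns(history):
        print(f"User: {user}\nBot:  {bot}")
```

Stepping by 2 relies on DialoGPT's strict user/bot alternation; a model with a different history format would likely need its own version of this pairing step inside its `predict` function.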
